Ridge Regression is a type of linear regression that addresses multicollinearity (when independent variables are highly correlated) by applying a regularization technique. It is used when the data suffers from overfitting, meaning the model performs well on the training data but poorly on new, unseen data.

How Ridge Regression Works:

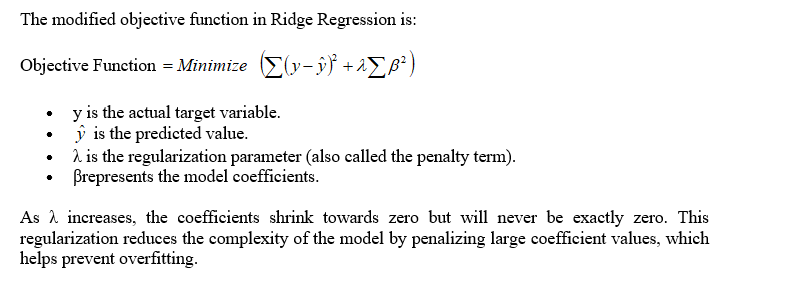

In ordinary linear regression, the goal is to minimize the sum of squared residuals (differences between observed and predicted values). However, in Ridge Regression, a penalty is added to the loss function in the form of the L2 regularization term, which is the sum of the squared values of the coefficients.

Key Characteristics of Ridge Regression:

- L2 Regularization: It adds the square of the magnitude of the coefficients as a penalty term to the loss function.

- Coefficient Shrinking: It shrinks the regression coefficients (but not to zero), making the model simpler and more robust.

- Bias-Variance Trade-off: By introducing some bias (via regularization), Ridge Regression reduces variance, leading to better generalization on unseen data.

Importance of Ridge Regression:

- Addresses Multicollinearity: In situations where predictor variables are highly correlated, Ridge Regression helps by reducing the impact of correlated features through regularization.

- Prevents Overfitting: By penalizing large coefficients, Ridge Regression reduces the model’s complexity, which helps in situations where the model fits the training data too well but fails to generalize to new data.

- Improved Prediction Accuracy: In high-dimensional data (when the number of predictors is close to or exceeds the number of observations), Ridge Regression often provides more reliable predictions than

Silivri su kaçağı tespiti Profesyonelce çalışıp su kaçağını nokta atışıyla buldular. https://blivebook.com/ustaelektrikci